Efficient caching system

Mastering the fundamentals of caching is crucial for System Design interviews, since this knowledge is required very often. Caching can significantly increase the performance of the systems you design, even more so nowadays, when many systems run in the cloud.

Caching also makes an even bigger difference when there are many users. If more than 1 million users access part of the system, a cache can make a massive difference in performance. Cache eviction policies determine which items are removed from the cache when it reaches its capacity limit.

Here are some commonly used cache eviction policies.

LRU (Least Recently Used) is a caching algorithm that determines which items should be evicted from a cache when it reaches capacity. The idea behind LRU is to evict the least recently accessed items first and keep the most recently accessed ones, because they are more likely to be accessed again.
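A minimal LRU sketch in Python, assuming a single-threaded process and a capacity measured in number of items. It uses the standard library's `collections.OrderedDict` to keep keys in access order; the class name `LRUCache` and its `get`/`put`/`keys` methods are illustrative, not a particular library's API.

```python
from collections import OrderedDict
from typing import Hashable, List, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Keys are kept in access order: least recently used first, most recent last.
        self._data: OrderedDict = OrderedDict()

    def get(self, key: Hashable) -> Optional[object]:
        if key not in self._data:
            return None  # cache miss
        # A read counts as an access, so move the key to the most recent end.
        self._data.move_to_end(key)
        return self._data[key]

    def put(self, key: Hashable, value: object) -> None:
        if key in self._data:
            self._data.move_to_end(key)  # updating an entry also counts as an access
        self._data[key] = value
        if len(self._data) > self.capacity:
            # popitem(last=False) removes the oldest entry, i.e. the least recently used one.
            self._data.popitem(last=False)

    def keys(self) -> List[Hashable]:
        return list(self._data)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" becomes the most recently used key
cache.put("c", 3)    # over capacity: "b" is evicted
print(cache.keys())  # ['a', 'c']
```

Both lookups and evictions are O(1) with this structure. The sketch returns `None` on a miss, so it cannot distinguish a miss from a stored `None`; Python's built-in `functools.lru_cache` decorator applies the same policy to function results.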

LRU is widely used in applications and scenarios where efficient caching of frequently accessed data is crucial.

Here are some everyday use cases for LRU caching.

Web Caching: LRU is commonly used to keep frequently accessed web pages, images, and other resources in memory. Keeping the most recently accessed content in the cache reduces the load on backend servers and improves response times for subsequent requests.

Database Query Result Caching: In database systems, LRU caching can store the results of frequently executed queries. This avoids repeated database access and speeds up query response times.

File System Caching: File systems often use LRU caching to cache frequently accessed files or file blocks.

CPU Cache Management: CPUs use LRU-based replacement policies, often approximations such as pseudo-LRU, to manage the cache hierarchy. By prioritizing the most recently accessed data, the CPU cache can minimize cache misses and improve overall processor performance.

Network Packet Caching: Networking applications can use LRU caching for frequently accessed network packets or data chunks. LRU can improve network performance by reducing the need for repeated data transmission.

API Response Caching: APIs that serve frequently requested data can benefit from LRU caching.

Compiler Optimization: Compilers may use LRU caching during compilation to store frequently used intermediate representations or symbol tables, which can speed up later compilation stages and reduce overall compilation time.

These are just a few examples of using LRU caching in various domains and applications. The primary goal is to reduce latency, improve response times, and optimize resource utilization by keeping frequently accessed data readily available in a cache.

Video Thumbnail Caching: YouTube could use LRU caching to store and serve frequently accessed video thumbnails. Thumbnails are displayed in search results, recommended videos, and video player previews, so caching the most recently accessed ones can significantly reduce the load on backend systems and make thumbnail retrieval more responsive.

Recommended Videos: Caching recommended videos can improve recommendation response times and reduce the computational load on the recommendation engine.

User Profile Caching: Instagram could cache frequently accessed user profiles to speed up the display of profile information, posts, followers, and other profile-related data. Caching user profiles allows faster retrieval and reduces the load on backend systems, improving the user experience.

User Stories: Instagram may cache user stories, including images, videos, stickers, and interactive elements. Caching stories helps ensure smooth playback and reduces the load on backend systems when displaying stories to users.

Movie and TV Show Metadata: Netflix could cache frequently accessed movie and TV show metadata, including titles, descriptions, genres, cast information, and ratings. Caching this information makes search results, recommendations, and content browsing more responsive and reduces repeated database queries.

Video Playback Data: Netflix may cache video playback data, such as user progress, watch history, and subtitle preferences. Caching this data lets users resume a video where they left off and keep their preferred settings without frequent round trips to backend servers.

LFU (Least Frequently Used) is an eviction policy that removes the items that have been accessed the fewest times.

It assumes that items accessed frequently are more likely to be accessed again in the future.

Tracking Access Frequency: LFU maintains an access count for each item in the cache and increments it every time the item is accessed.

Eviction Decision: When the cache reaches its capacity limit and a new item needs to be inserted, LFU examines the access counts of the cached items and identifies the one with the lowest count.

Eviction of the Least Frequently Used Item: The item with the lowest access count is evicted to make room for the new item.

If multiple items share the lowest count, LFU may use additional criteria, such as insertion time, to break the tie and choose which item to evict.

Updating Access Frequency: After the eviction, LFU sets the access count of the newly inserted item to 1, indicating that it has been accessed once.
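The steps above can be sketched as follows. This is a minimal, hypothetical `LFUCache` class, assuming a single-threaded process, that counts accesses per key, starts new items at a count of 1, and breaks ties by insertion time as described. Choosing the victim scans every key (O(n)); production LFU implementations typically group keys into frequency buckets to make eviction O(1).

```python
import itertools
from typing import Dict, Hashable, List, Optional


class LFUCache:
    """Fixed-capacity cache that evicts the least frequently used key.

    Ties on frequency are broken by insertion time (earliest insertion evicted first).
    """

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._values: Dict[Hashable, object] = {}
        self._freq: Dict[Hashable, int] = {}
        self._inserted_at: Dict[Hashable, int] = {}
        self._clock = itertools.count()  # monotonically increasing insertion counter

    def get(self, key: Hashable) -> Optional[object]:
        if key not in self._values:
            return None  # cache miss
        self._freq[key] += 1  # every hit increases the access count
        return self._values[key]

    def put(self, key: Hashable, value: object) -> None:
        if key in self._values:
            self._values[key] = value
            self._freq[key] += 1
            return
        if len(self._values) >= self.capacity:
            # Victim: lowest count; among equal counts, the earliest insertion.
            victim = min(self._values, key=lambda k: (self._freq[k], self._inserted_at[k]))
            del self._values[victim], self._freq[victim], self._inserted_at[victim]
        self._values[key] = value
        self._freq[key] = 1  # a newly inserted item counts as accessed once
        self._inserted_at[key] = next(self._clock)

    def keys(self) -> List[Hashable]:
        return list(self._values)


cache = LFUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")
cache.get("a")       # "a" now has a count of 3, "b" still has 1
cache.put("c", 3)    # "b" has the lowest count, so it is evicted
print(cache.keys())  # ['a', 'c']
```

Note that a newly inserted item always starts with the lowest possible count, so it is the first eviction candidate even if it is about to become popular.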

LFU is based on the assumption that items that have been accessed frequently are likely to be popular and will continue to be accessed frequently in the future.

By evicting the least frequently used items, LFU aims to keep the more frequently accessed items in the cache, thus maximizing cache hit rates and improving overall performance. However, LFU may not be suitable for all scenarios. It can keep items that were accessed heavily in the past but are no longer relevant, because their high counts protect them from eviction.

Additionally, LFU may not handle sudden changes in access patterns effectively. For example, if an item was rarely accessed in the past but suddenly becomes popular, LFU may not quickly adapt to the new access frequency and may prematurely evict the item.

Here are some potential use cases where the LFU eviction policy can be beneficial.

Web Content Caching: LFU can be used to cache web content such as HTML pages, images, and scripts.

Frequently accessed content, which is likely to be requested again, will remain in the cache, while less popular or rarely accessed content will be evicted. This can improve the responsiveness of web applications and reduce the load on backend servers.

Database Query Result Caching : In database systems, LFU can be applied to cache the results of frequently executed queries. The cache can store query results, and LFU ensures that the most frequently requested query results remain in the cache.

This can significantly speed up subsequent identical queries, reducing the need for repeated database access.

API Response Caching: LFU can be used to cache responses from external APIs. Popular API responses that clients request frequently can be cached using LFU, improving performance and reducing the dependency on external API services.

This is particularly useful when the API responses are relatively static or change infrequently.

Content Delivery Networks (CDNs): LFU can be used in CDNs to cache frequently accessed content, such as images, videos, and static files.

Content that is heavily requested by users will be retained in the cache, resulting in faster content delivery and reduced latency for subsequent requests.

Recommendation Systems : LFU can be utilized in recommendation systems to cache user preferences, item ratings, or collaborative filtering results.

By caching this data, LFU ensures that the most relevant and frequently used information for generating recommendations is readily available, leading to faster recommendations.

Caching is a technique used to store data temporarily in a readily accessible location so that future requests for that data can be served faster. The main purpose of caching is to improve performance and efficiency by reducing the need to repeatedly retrieve or compute the same data over and over again.

This relatively simple concept is a powerful tool in your web performance toolkit. You might think of caching as keeping your favorite snacks in your desk drawer: you know exactly where to find them and can grab them without wasting time looking for them.

With server-side caching, when a visitor requests a specific page on your website, the server retrieves a stored copy of that page and returns it to the visitor. This is much faster than the traditional process of assembling the page from scratch out of various parts stored in a database.

The concepts of a cache hit and a cache miss are also important in understanding server-side caching. A cache hit, where the requested resource is found in the cache, is the jackpot: fast, efficient, and exactly what you should aim for. A cache miss is the flip side of the coin: the resource is not in the cache, so the server has to retrieve it from the original source.
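To make hits and misses concrete, here is a hypothetical cache-aside wrapper in Python that counts both. `load_from_origin` stands in for the slow path (for example, assembling a page from a database), and `render_page` is an illustrative placeholder. The cache is unbounded, so a real deployment would pair it with an eviction policy such as LRU.

```python
from typing import Callable, Dict, Hashable


class CountingCache:
    """Cache-aside lookup that records hits and misses (illustrative only)."""

    def __init__(self, load_from_origin: Callable[[Hashable], object]) -> None:
        self._load = load_from_origin  # hypothetical slow source, e.g. a database query
        self._store: Dict[Hashable, object] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> object:
        if key in self._store:
            self.hits += 1            # cache hit: served straight from the cache
            return self._store[key]
        self.misses += 1              # cache miss: fall back to the original source
        value = self._load(key)
        self._store[key] = value      # keep a copy for the next request
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def render_page(path: Hashable) -> str:
    # Hypothetical stand-in for building a page from database content.
    return f"<html>{path}</html>"


cache = CountingCache(render_page)
for path in ["/home", "/about", "/home", "/home"]:
    cache.get(path)
print(cache.hits, cache.misses, cache.hit_ratio())  # 2 2 0.5
```

The hit ratio is the usual way to judge whether a cache is paying off: the more requests it answers without touching the origin, the more latency and backend load it saves.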

Both server-side and client-side caching have their own characteristics and strengths.

Using both server-side and client-side caching can make your website consistently fast, offering a great experience to users.

Cache coherency refers to the consistency of data stored in different caches that are supposed to contain the same information.

When multiple processors or cores access and modify the same data, ensuring cache coherency becomes difficult.

Serving stale content occurs when outdated cached content is displayed to users instead of the most recent information from the origin server.

This can hurt the accuracy and timeliness of the information presented to users.

Dynamic content changes frequently based on user interactions or real-time data. It also includes user-specific data, such as personalized recommendations or account details. Standard caching mechanisms, which treat all requests equally, are therefore ineffective for personalized content.
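A common application-level way to limit stale content is to invalidate a cached entry whenever the underlying data is written, so the next read reloads it from the origin. The sketch below assumes a single process and uses plain dictionaries as stand-ins for the database and the cache; `ProfileService` and its methods are hypothetical. Hardware cache coherency between CPU cores is handled inside the processor and is not what this code models.

```python
from typing import Dict


class ProfileService:
    """Cache-aside reads plus invalidate-on-write to avoid serving stale data."""

    def __init__(self) -> None:
        self.db: Dict[str, str] = {}     # stand-in for the origin database (hypothetical)
        self.cache: Dict[str, str] = {}  # stand-in for a cache in front of the database

    def read(self, user_id: str) -> str:
        if user_id not in self.cache:
            self.cache[user_id] = self.db[user_id]  # miss: load from origin, then cache
        return self.cache[user_id]

    def update(self, user_id: str, bio: str) -> None:
        self.db[user_id] = bio
        self.cache.pop(user_id, None)  # invalidate so the next read fetches fresh data


svc = ProfileService()
svc.db["42"] = "old bio"
print(svc.read("42"))        # old bio (miss, then cached)
svc.update("42", "new bio")  # writes the database and drops the cached copy
print(svc.read("42"))        # new bio
```

With concurrent writers across several servers, a read can still repopulate the cache with an old value between the write and the invalidation, which is why time-based expiry is often added as a backstop.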

Finding a caching strategy that balances performance against data freshness is often complex.

Client-side caching can be particularly useful for mobile users, as it can reduce the amount of data that needs to be downloaded and improve performance on slow or congested networks.

When deciding whether to use client-side caching, API developers should consider the nature of the data being stored and how frequently it is likely to change. If the data is unlikely to change frequently, client-side caching can be a useful strategy to improve the performance and scalability of the API.

However, if the data is likely to change frequently, client-side caching may not be the best approach, as it could result in outdated data being displayed to the user.
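One way to bound how stale client-side data can get is to expire cached entries after a fixed age, similar in spirit to the HTTP `Cache-Control: max-age` directive. The sketch below is an application-level `TTLCache` (a hypothetical name), not how browsers implement HTTP caching; the `clock` parameter is an assumption added so the example is deterministic, and the `/users/42` key is illustrative.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Client-side cache whose entries expire max_age seconds after being stored."""

    def __init__(self, max_age: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.max_age = max_age
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[object, float]] = {}  # key -> (value, stored_at)

    def get(self, key: Hashable) -> Optional[object]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if self._clock() - stored_at >= self.max_age:
            del self._entries[key]  # expired: the caller must fetch fresh data from the API
            return None
        return value

    def put(self, key: Hashable, value: object) -> None:
        self._entries[key] = (value, self._clock())


now = [0.0]  # fake clock so the example is deterministic
cache = TTLCache(max_age=60, clock=lambda: now[0])
cache.put("/users/42", {"name": "Ada"})
now[0] = 30.0
print(cache.get("/users/42"))  # {'name': 'Ada'}
now[0] = 61.0
print(cache.get("/users/42"))  # None: the entry expired and must be re-fetched
```

Choosing `max_age` is exactly the trade-off described above: a long value saves more requests for data that rarely changes, while a short value limits how long users can see outdated data.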

Client-side caching has pros and cons, and API developers should weigh them when deciding whether to use it and how to implement it in their API.

Server-side caching is a technique used to cache data on the server to reduce repeated work, such as database queries and data transfers from backend services.

This can improve the performance of an API by reducing the time required to serve a request, and can also help to reduce the load on the API server.

Database caching involves caching the results of database queries on the server, so that subsequent requests for the same data can be served quickly without having to re-run the query.

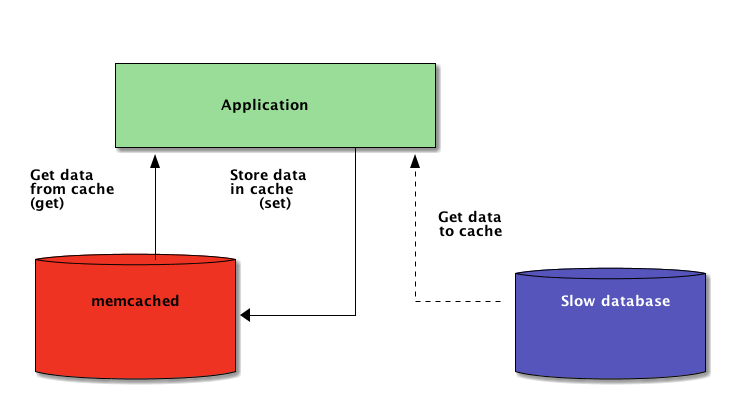

This can improve the performance of an API by reducing the time required to fetch data from a database.

In-memory caching: This technique stores data in the server's RAM, so that when a request for that data is made, it can be retrieved quickly from memory. Since reading from memory is faster than reading from disk, this can significantly improve the performance of the API.
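In Python, one way to sketch in-memory caching of database query results is the standard library's `functools.lru_cache` decorator around a query function. The `products` table, its rows, and `product_by_id` are hypothetical, and an in-memory SQLite database stands in for the real one.

```python
import functools
import sqlite3
from typing import Optional

# Hypothetical schema and data in an in-memory SQLite database, for the demo only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
    [(1, "keyboard", 49.0), (2, "mouse", 19.0)],
)


@functools.lru_cache(maxsize=1024)
def product_by_id(product_id: int) -> Optional[tuple]:
    """Run the SQL query only on a cache miss; repeated calls are served from RAM."""
    row = conn.execute(
        "SELECT id, name, price FROM products WHERE id = ?", (product_id,)
    ).fetchone()
    return row  # rows are tuples, so sharing the cached object between callers is safe


product_by_id(1)  # miss: executes the query
product_by_id(1)  # hit: no database access
print(product_by_id.cache_info())  # CacheInfo(hits=1, misses=1, maxsize=1024, currsize=1)
```

The decorator does not know when rows change, so after a write you would call `product_by_id.cache_clear()` or accept stale results. Each server process also keeps its own copy, which is why multi-server APIs often use a shared cache instead.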

File system caching involves storing data on the file system, so that subsequent requests for the same data can be served quickly without fetching it again from the original source, such as a database or a remote service.

Reverse proxy caching: Reverse proxy caching involves having an intermediary server, known as a reverse proxy, cache API responses. When a request is made, the reverse proxy checks if it has a cached version of the response, and if so, it returns it to the client. If not, the reverse proxy forwards the request to the API server, caches the response, and then returns the response to the client.

This helps to reduce the load on the API server and improve the overall performance of the API.

Content delivery network (CDN) caching involves using a CDN to cache data from the API server, so that subsequent requests for the same data can be served quickly from the CDN instead of from the API server.
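Reverse proxies and CDN edge servers follow the same basic flow described above: check the cache, otherwise forward the request and store the response. The sketch below is a hypothetical `ReverseProxyCache` in which `forward_to_origin` stands in for the call to the API server. It caches only successful `GET` responses and ignores HTTP caching headers and expiry, which real reverse proxies such as NGINX or Varnish take into account.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Response:
    status: int
    body: str


class ReverseProxyCache:
    """Sits in front of an API server and answers repeated GETs from its cache."""

    def __init__(self, forward_to_origin: Callable[[str, str], Response]) -> None:
        self._forward = forward_to_origin  # hypothetical call to the upstream API server
        self._cache: Dict[Tuple[str, str], Response] = {}

    def handle(self, method: str, path: str) -> Response:
        key = (method, path)
        if method == "GET" and key in self._cache:
            return self._cache[key]             # cached: the API server is not contacted
        response = self._forward(method, path)  # not cached: forward the request upstream
        if method == "GET" and response.status == 200:
            self._cache[key] = response         # store only successful, safe-to-repeat responses
        return response


calls = []


def origin(method: str, path: str) -> Response:
    calls.append((method, path))
    return Response(200, f"data for {path}")


proxy = ReverseProxyCache(origin)
proxy.handle("GET", "/api/items")
proxy.handle("GET", "/api/items")
print(len(calls))  # 1
```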

Server-side caching also has pros and cons, and API developers should weigh them when deciding whether to use it and how to implement it in their API.

Determining the best caching strategy for a particular API requires considering several factors: the requirements, the performance goals, the resources available to implement the strategy, and architectural considerations.

The requirements of the API, such as the types of data being served, how often the data is updated, and the expected traffic patterns, narrow down which caching strategies are appropriate.

The performance goals of the API, such as the desired response time and the acceptable level of stale data, also shape the choice.

The available resources, such as hardware, software, and network infrastructure, matter because some caching strategies require more resources than others.

The architecture of the data served by the API, such as its location and format, matters too, since some caching strategies suit certain data architectures better than others.

Finally, the needs of the API clients, such as the devices they use, their network conditions, and their available storage capacity, matter because some caching strategies suit certain types of clients better than others.

Different caching strategies suit different use cases, depending on factors such as data freshness, data size, data access patterns, performance goals, cost, complexity, and scalability.

Client-side caching, using techniques such as HTTP cache headers and local storage, can be a good option when data freshness is less critical and the data being served is small.

Client-side caching can also be a good option when network bandwidth is limited, provided the client has enough storage for the cached data.

Server-side database caching, which involves caching data in a database that is separate from the primary database, can be a good option for use cases where data freshness is critical and the size of the data being served is large.

Server-side database caching can also be a good option for use cases where data access patterns are complex and data needs to be served to multiple clients concurrently.

Server-side in-memory caching, which involves caching data in memory on the server, can be a good option for use cases where data freshness is critical and the size of the data being served is small.

Server-side in-memory caching can also be a good option for use cases where data access patterns are simple and data needs to be served to multiple clients concurrently.

Hybrid caching, which involves combining client-side caching and server-side caching, can be a good option for use cases where data freshness is critical and the size of the data being served is large.
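Hybrid caching can be sketched as a two-tier lookup: check the client's local cache first, then a shared server-side cache, and only then the origin. In this hypothetical `HybridCache` both tiers are plain dictionaries and `load_from_origin` is a placeholder. It ignores expiry and invalidation, which a real hybrid setup needs to keep both tiers fresh.

```python
from typing import Callable, Dict, Hashable


class HybridCache:
    """Two-tier lookup: client-side local cache, then a shared server-side cache."""

    def __init__(self, shared: Dict[Hashable, object],
                 load_from_origin: Callable[[Hashable], object]) -> None:
        self.local: Dict[Hashable, object] = {}  # per-client cache (e.g. on the device)
        self.shared = shared                     # stand-in for a server-side cache shared by all clients
        self._load = load_from_origin            # hypothetical fetch from the origin

    def get(self, key: Hashable) -> object:
        if key in self.local:
            return self.local[key]               # fastest path: no network request at all
        if key in self.shared:
            value = self.shared[key]             # served by the server-side cache
        else:
            value = self._load(key)              # both tiers missed: go to the origin
            self.shared[key] = value
        self.local[key] = value
        return value


origin_calls = []


def load(key: Hashable) -> object:
    origin_calls.append(key)
    return str(key).upper()


server_cache: Dict[Hashable, object] = {}
alice = HybridCache(server_cache, load)
bob = HybridCache(server_cache, load)
alice.get("feed")  # origin
bob.get("feed")    # shared server-side cache
bob.get("feed")    # Bob's local cache
print(len(origin_calls))  # 1
```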

Content caching systems can be designed by considering factors such as content popularity, privacy preservation, collaboration between nodes, and the optimization of caching. Federated learning and Wasserstein generative adversarial networks (WGANs) can be used to accurately predict content popularity while protecting user privacy.

Content-Centric Networking (CCN) offers larger network capacity and lower delivery delay by caching content in routers. On-path and off-path caching schemes, collaboration between routers, and energy consumption minimization can further improve caching efficiency in CCN. Coordinating content caching and retrieval using Content Store (CS) information in Information-Centric Networking (ICN) can significantly improve cache hit ratio, content retrieval latency, and energy efficiency. Considering both content popularity and priority in hierarchical network architectures with multi-access edge computing (MEC) and software-defined networking (SDN) controllers can optimize content cache placement and improve quality of experience (QoE).
