Performance testing for continuous integration

The benefits of CI include faster feedback loops, improved collaboration, and the ability to catch issues early in the development cycle. Each stage of the pipeline uses different tools for effective automation. Examples include Git for source control; Azure Pipelines, CircleCI, and Jenkins for orchestrating builds, with Gradle as a build tool; and JMeter, k6, LoadRunner, Selenium, and Appium for testing.

Deployments can be customized using technologies like Terraform, Puppet, Docker, and Kubernetes.

The planning stage is where you define the performance testing objectives, identify the key performance metrics to measure, and determine the workload scenarios to simulate during testing. This stage sets the foundation for the entire performance testing process.

Define Performance Goals: Clearly define the performance goals and expectations for your application. For example, determine the acceptable response time, throughput, or server resource utilization.

Identify Key Performance Metrics: Identify the key performance metrics that align with your performance goals. Examples include response time, throughput, error rates, and server resource utilization.

Determine Workload Scenarios: Identify the workload scenarios that represent the expected usage patterns of your application. For example, simulate different user loads, concurrent users, or transaction volumes.

The design stage involves creating performance test scripts and scenarios based on the identified workload scenarios and performance goals.

This stage also includes setting up the necessary test environment and selecting appropriate performance testing tools.

Create Performance Test Scripts: Develop test scripts that simulate the workload scenarios identified in the planning stage. These scripts should include activities such as user actions, data input, and expected system responses.

Set Up Test Environment: Set up a dedicated test environment that closely resembles your production environment. This includes configuring servers, databases, network settings, and any other components required for testing.

Select Performance Testing Tools: Choose performance testing tools that align with your testing requirements and budget. Popular performance testing tools include Apache JMeter, Gatling, k6, and LoadRunner.

The execution stage aims to identify performance bottlenecks and validate whether the system meets the defined performance goals.

Execute Performance Tests: Run the performance test scripts developed in the design stage against the test environment.

Analyze Performance Metrics: Analyze the collected performance metrics to identify any performance bottlenecks or deviations from the defined performance goals. Use performance testing tools or custom scripts to generate performance reports and dashboards for better visibility.

Tune and Optimize: If performance bottlenecks are identified, work on tuning and optimizing the system to improve its performance. This may involve optimizing code, database queries, server configurations, or infrastructure scaling.
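As a rough illustration of the execute and analyze steps, the sketch below drives a target with concurrent workers and reduces the samples to response time, throughput, and error rate. It is a minimal sketch, not a replacement for a tool such as JMeter or k6: `run_load`, `summarize`, and `fake_endpoint` are hypothetical names, and `fake_endpoint` only sleeps where a real script would send a request to the system under test.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(target, total_requests, concurrency):
    """Call `target` total_requests times across `concurrency` worker threads.

    Returns a list of (latency_seconds, succeeded) tuples, one per call.
    """
    def one_call(_):
        start = time.perf_counter()
        try:
            target()
            ok = True
        except Exception:
            ok = False
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(one_call, range(total_requests)))


def summarize(results, wall_seconds):
    """Reduce raw samples to response time, error rate, and throughput."""
    latencies = sorted(latency for latency, _ in results)
    errors = sum(1 for _, ok in results if not ok)
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    rank = max(1, (95 * len(latencies) + 99) // 100)
    return {
        "requests": len(results),
        "mean_s": statistics.fmean(latencies),
        "p95_s": latencies[rank - 1],
        "error_rate": errors / len(results),
        "throughput_rps": len(results) / wall_seconds,
    }


def fake_endpoint():
    """Hypothetical stand-in for a real request to the system under test."""
    time.sleep(0.005)


if __name__ == "__main__":
    started = time.perf_counter()
    samples = run_load(fake_endpoint, total_requests=200, concurrency=20)
    print(summarize(samples, time.perf_counter() - started))
```

The resulting dictionary is the kind of summary that a report, a dashboard, or a pass/fail check can consume.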

Integrating performance tests into the CI pipeline can be achieved by using plugins or custom scripts that trigger the performance tests after the completion of functional tests.

Configure Thresholds: Define performance thresholds for each performance metric to determine whether the build passes or fails. For example, if the response time exceeds a certain threshold, the build should fail.
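A threshold check of this kind can be sketched in a few lines. The limits in `THRESHOLDS` and the metric names are hypothetical placeholders; real values would come from the performance goals agreed during planning, and the metrics would be read from the test tool's report.

```python
# Hypothetical limits; real values should come from the planning stage.
THRESHOLDS = {
    "p95_s": 0.5,        # 95th percentile response time, in seconds
    "error_rate": 0.01,  # fraction of failed requests
}


def check_thresholds(metrics, thresholds):
    """Return a list of human-readable violations; empty means the build passes."""
    violations = []
    for name, limit in thresholds.items():
        if name not in metrics:
            violations.append(f"{name}: metric missing from results")
        elif metrics[name] > limit:
            violations.append(f"{name}: {metrics[name]} exceeds limit {limit}")
    return violations


def gate(metrics, thresholds=THRESHOLDS):
    """Print violations and return a process exit code (0 = pass, 1 = fail)."""
    violations = check_thresholds(metrics, thresholds)
    for line in violations:
        print("FAIL", line)
    return 1 if violations else 0


if __name__ == "__main__":
    # Example metrics; in a pipeline these would come from the test report.
    print("exit code:", gate({"p95_s": 0.42, "error_rate": 0.0}))
```

In a pipeline step, passing the return value of `gate` to `sys.exit` lets the CI server mark the build as failed, since most CI tools treat a non-zero exit code as a failure. A missing metric is reported as a violation so that a broken report cannot silently pass the gate.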

Automated Reporting: Set up automated performance reporting to provide visibility into the performance test results.

At times, however, your team is waiting for another team to finish a new feature. In this case, the only way to get started with your tests is to mock the dependencies of your application.
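To make the idea of mocking a dependency concrete, here is a minimal sketch of a mock HTTP server built only on the Python standard library. The `/inventory/42` path and its canned payload are invented for illustration; a real mock would return whatever the unavailable dependency is expected to return.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical canned responses for a dependency that is not available yet.
CANNED = {
    "/inventory/42": {"sku": 42, "in_stock": True},
}


class MockHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = CANNED.get(self.path)
        status = 200 if body is not None else 404
        if body is None:
            body = {"error": "not mocked"}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep load-test output free of per-request logging


def start_mock(port=0):
    """Start the mock on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), MockHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_mock()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
    conn.request("GET", "/inventory/42")
    response = conn.getresponse()
    print(response.status, json.loads(response.read()))
    conn.close()
    server.shutdown()
    server.server_close()
```

The application under test is then pointed at the mock's address instead of the real dependency, so load tests can start before the other team has finished.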

Mocking also enables you to treat each part of your system as its own component, but more on that in the section on component-based testing. Besides implementing efficient mock servers, there are a number of other considerations to keep in mind:

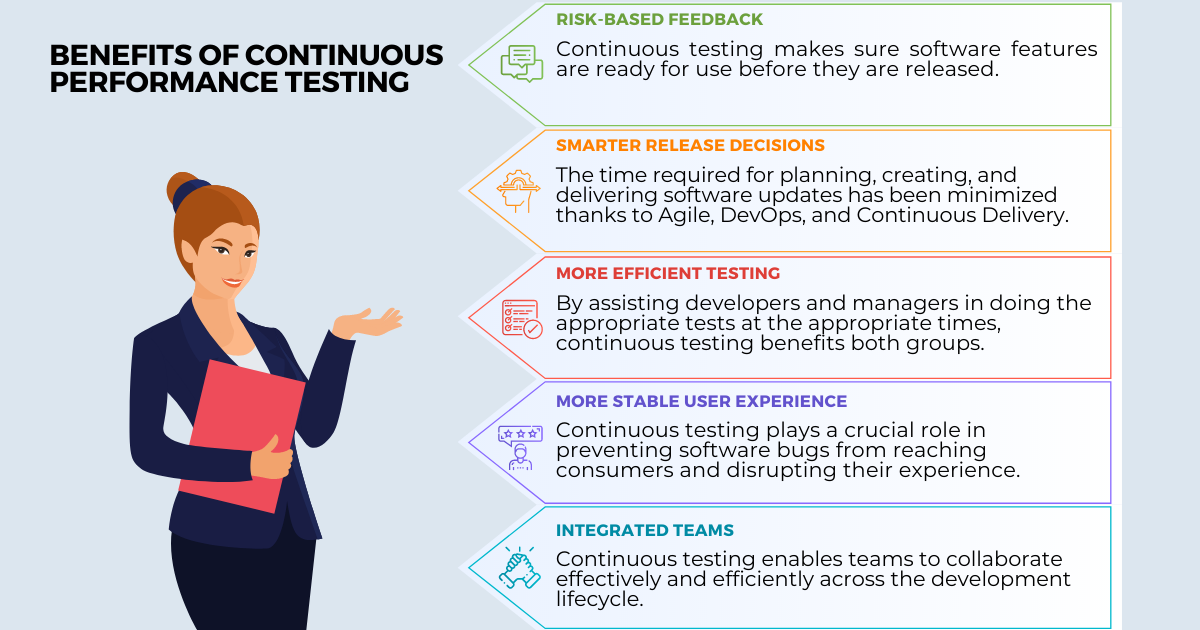

This rapid feedback allows developers to be more efficient, resulting in a quicker go-to-market strategy. This part will help you identify performance issues, enabling you to take corrective action in real time. Getting the correct technical details is a critical aspect of implementing continuous load testing.

Failing to foster this culture has negative consequences. While load testing has many benefits, the cost increases as resource usage increases.

Running unnecessary tests will inevitably lead to unnecessary costs. In addition, your organization will miss out on a major benefit from continuous load testing—the prevention of future performance issues.

Although continuous load testing has some major benefits, there are a few challenges you should consider before implementation. Likely to be the biggest challenge—especially for smaller organizations—is a lack of resources.

Whether in terms of software, hardware, or engineers, a lack of resources determines whether you can implement continuous load testing or not. However, focusing on a subset of your product is one way of managing resources. An integral part of making load testing continuous is the ability to integrate with other processes.

As your system is growing and getting more complex, replicating it in a test environment will get more complex as well. The solution is to focus on testing each part of your system in isolation first.

You can always test the different parts together at a later point. This challenge can present itself in two ways. Always ensure that your tests are validating real-life use cases, not just any random user behavior. One approach to solving this issue is the concept of traffic replay.
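The core idea of traffic replay can be sketched as follows: recorded requests are re-issued in their original order and relative timing, optionally compressed by a speed factor. This is only an illustration of the concept, not how any particular replay product works; `RecordedRequest`, `replay`, and the `send` callback are hypothetical names.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordedRequest:
    offset_s: float  # seconds since the start of the recording
    method: str
    path: str


def replay(recording, send, speed=1.0, sleep=time.sleep, clock=time.monotonic):
    """Re-issue recorded requests in order, keeping their relative timing.

    `send(method, path)` is a caller-supplied function that performs the
    request; `speed` > 1 compresses the recording (2.0 replays twice as fast).
    Returns the list of values returned by `send`.
    """
    results = []
    started = clock()
    for request in sorted(recording, key=lambda r: r.offset_s):
        due = request.offset_s / speed
        wait = due - (clock() - started)
        if wait > 0:
            sleep(wait)
        results.append(send(request.method, request.path))
    return results


if __name__ == "__main__":
    # Hypothetical recording; real traffic would be captured from production.
    recording = [
        RecordedRequest(0.00, "GET", "/products"),
        RecordedRequest(0.05, "GET", "/products/7"),
        RecordedRequest(0.10, "POST", "/cart"),
    ]
    print(replay(recording, lambda method, path: f"{method} {path}", speed=2.0))
```

Because the requests come from a recording rather than a hand-written script, the test exercises the use cases real users actually perform.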

The more moving parts in a system, the more complicated it is to identify the exact parts introducing latency in the request path.

In traditional load testing, an application is often tested as a single monolithic application. This approach works well for simple systems only being tested once in a while.

Depending on the complexity of the application and the user transactions, the above could require anywhere from a few days to several weeks to orchestrate successfully.

The end result is not only extremely time-consuming and resource-intensive for the team, but can also put your development and delivery on hold in the fast-paced world of agile and DevOps workflows.

To truly understand how your application is going to behave in production, or at minimum to get a good idea of your overall application performance outside of your functional testing processes, it's essential to gauge how your application responds under load.

By integrating and automating this process, you can ensure that you're retaining or increasing load testing coverage while reducing the time it takes to complete the performance testing process.

Amplifying the feedback you can get from these load tests helps ensure that every change you make to your codebase is tested and fixed as early as possible.

Begin by identifying how the load testing tools you rely on can integrate with your CI/CD pipeline. This may be through native integrations or an API, but either way, you should be able to set these up so that you can kick off your load tests automatically.

Automating tests in LoadNinja is easier than you'd think.

Once you have recorded the tests you'd like to include in your CI/CD processes, you can integrate with a CI tool like Jenkins using the LoadNinja API. First, you'll need to grab your API key. Then you can add the HTTP Request plugin to your Jenkins instance.

After these steps have been completed, you can check out the LoadNinja API documentation in SwaggerHub to identify how you want to use the API. You can use Swagger Inspector to test the API as well as get the response data you need to put into your Jenkins build (i.e., project name, project ID, account ID, etc.).

By James

Continuous performance testing adheres to agile methodology, meaning speed and fluidity are the keys to testing. You will find no shortage of articles detailing exhaustive lists of best practices to achieve this, no two of which will exactly agree on which practices make the cut. Take note of the inevitable overlap as you read on. Continuous performance testing is the practice of tracking how an application performs under increased load on a continuous basis. Here are five essential continuous performance testing best practices to consider. First things first: set a foundation of expectations based on your performance service level agreements (SLAs).

However, ensuring that software performs well under various loads and conditions is equally important. This is where performance testing comes into play. Performance testing is a type of testing that evaluates the responsiveness, scalability, and stability of a software application under different workloads and conditions. It helps identify performance bottlenecks, such as slow response times, high resource utilization, or memory leaks, which can negatively impact the user experience and overall system performance.

Load Testing: This type of test is performed to determine how well the system performs under expected load conditions.

In traditional load testing, an application is often tested as a single monolith, which works well for simple systems that are only tested once in a while. Component-based load testing instead allows teams to focus on the individual parts of the system in isolation.

Not only does component-based load testing identify the parts of the system introducing latency, but it also increases the efficiency of load tests and decreases cost. A key challenge of component-based load testing is determining how to isolate each component, which can often be troublesome in a distributed system where services are highly dependent on one another.

As mentioned a few times throughout this post, this is best done by combining traffic replay with automatic mocks. Testing components in isolation is a powerful way of testing in general, not just when executing load tests.

By now it should be clear how continuous load testing would have prevented the issues faced by the engineers in the example from the introduction. Though the up-front cost of engineering hours may seem high, continuous load testing pays off in the end by ensuring performance and resiliency, as well as speeding up the development process considerably, compared to traditional approaches.

Specific test criteria can be defined based on desired response time, throughput, hit rate, error rate, CPU utilization, memory utilization, and similar metrics.

Just as unit tests are used to test the smallest units of application code, we want performance tests to target the smallest unit of system functionality. In an ideal world, your application would be developed using a microservices architecture. A microservices architecture makes use of small, decoupled, independently functioning services.

By providing individual endpoints, applications utilizing microservices architecture are easier to set up, maintain, and organize performance unit tests for. For organizations not yet using microservices, there are alternative solutions that can be explored.

You may wonder how we know which performance unit test to run? The solution is surprisingly simple… tagging! Through clever use of tagging, we can fine-tune which steps in the pipeline are run, and which tests are run during the testing portions.

Tagging can flag whether or not the incoming change should impact performance. This way the performance testing step can be bypassed in cases where no changes to performance are expected. In an advanced DevOps practice, the tags can also be used to determine which performance tests should be run.
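One way such tag-based selection could look is sketched below. The test names, the tags, and the `no-perf-impact` marker are all hypothetical; how tags reach the pipeline (commit messages, pull request labels, changed paths) depends on your tooling.

```python
# Hypothetical registry: each performance test lists the tags it covers.
PERF_TESTS = {
    "checkout_latency": {"checkout", "payments"},
    "search_throughput": {"search"},
    "login_burst": {"auth"},
}

# Hypothetical tag meaning "this change should not affect performance".
NO_PERF_IMPACT = "no-perf-impact"


def select_perf_tests(change_tags, registry=PERF_TESTS):
    """Return the sorted names of performance tests to run for a change."""
    tags = set(change_tags)
    if NO_PERF_IMPACT in tags:
        return []  # bypass the performance step entirely
    selected = [name for name, covers in registry.items() if covers & tags]
    # With no matching tag, run everything rather than silently skipping.
    return sorted(selected) if selected else sorted(registry)


if __name__ == "__main__":
    print(select_perf_tests(["payments", "auth"]))
    print(select_perf_tests(["docs", NO_PERF_IMPACT]))
```

Falling back to the full suite for untagged changes is a deliberately cautious choice in this sketch; a team that trusts its tagging discipline might skip the step instead.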

Implementing these enhancements to the pipeline logic will save precious time and resources for your organization. Making these changes to your DevOps process may be difficult and time-consuming, but they pay off by ensuring your pipeline runs as efficiently as possible, while also maintaining the highest quality standards including strong performance.

Performance in Continuous Integration

Types of Tests: Use test frameworks, such as JUnit, to develop tests that are easily integrated into existing continuous-integration environments.

Listing 3: Browser-driven functional test (fragment) that searches for "DVD Player", adds a review, adds the product to the cart, and closes the driver.

Adding Performance Tests to the Build Process: Adding performance tests into your continuous-integration process is one important step to continuous performance engineering.

Conducting Measurements: Just as the hardware environment affects application performance, it affects test measurements.

Analyzing Measurements: Some key metrics for analysis include CPU usage, memory allocation, network utilization, the number and frequency of database queries and remoting calls, and test execution time.

Regression Analysis: Every software change is a potential performance problem, which is exactly why you want to use regression analysis.

When and how often should you do regression analysis? Here are three rules of thumb.

First, perform regression analysis every time you are about to make substantial architectural changes to your application, such as the one in the example above. To identify a regression caused by substantial architectural changes, it is often necessary to have the application deployed in an environment where you can simulate larger load. This type of regression analysis cannot be done continuously, as it would be too much effort.

Second, automate regression analysis on your unit and functional tests as explained in the previous sections. This will give you great confidence about the quality of the small code changes your developers apply.

Third, perform regression analysis in the load- and performance-testing phases of your project, and analyze regressions of performance data you capture in your production environment. Here the best practice is to compare the performance metrics to a baseline result.

The baseline is typically a result of a load test on your previously released software.
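A baseline comparison of this kind can be sketched as below. The 10% tolerance and the metric names are assumptions chosen for illustration, and the sketch only handles metrics where a lower value is better.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Compare metrics where lower is better (response time, CPU, query count).

    Returns {metric: relative_change} for every metric that is worse than
    the baseline by more than `tolerance` (0.10 = 10%).
    """
    regressions = {}
    for name, base_value in baseline.items():
        if name not in current or base_value <= 0:
            continue  # nothing meaningful to compare against
        change = (current[name] - base_value) / base_value
        if change > tolerance:
            regressions[name] = change
    return regressions


if __name__ == "__main__":
    # Hypothetical numbers: the baseline comes from the previous release.
    baseline = {"p95_ms": 200, "db_queries": 12}
    current = {"p95_ms": 250, "db_queries": 12}
    print(find_regressions(baseline, current))
```

Storing the baseline alongside the release it was measured on keeps the comparison reproducible from one build to the next.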

Imagine a scenario where your web application works fine when adoption is low, but the same experience fizzles out when there is a higher load on the server. This experience can dampen your growth plans, which in turn will impact the revenue numbers 🙁. Speed and quality must go hand in hand. Shipping new features at a rapid pace should not come at the cost of quality. Teams are no longer using the traditional waterfall model for software development. The agile model has largely taken over, as testing and development can be done in a continuous manner.
